Expert's Opinion

The Price of Interoperability


The Unchallenged Status Quo

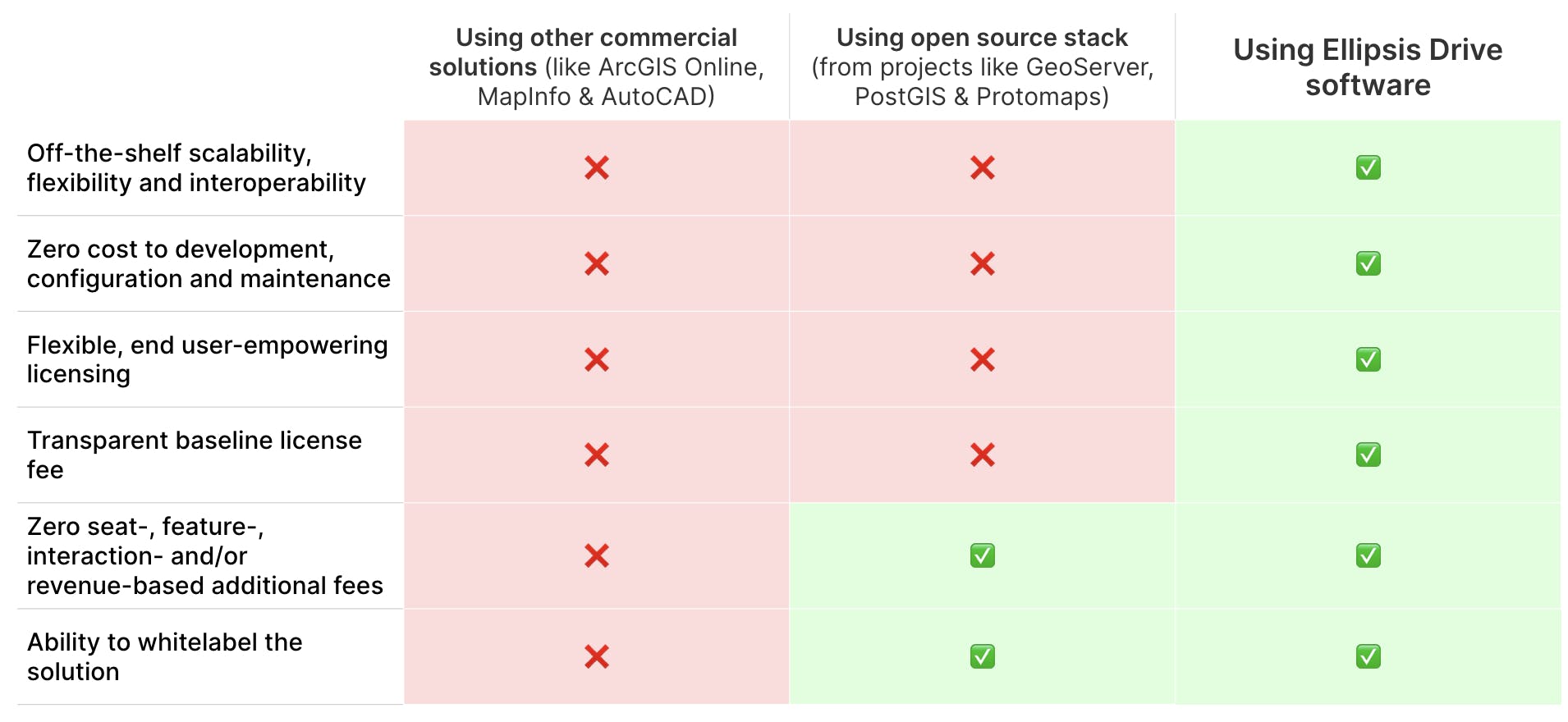

What is the price you pay for having a commercially serviced spatial data infrastructure from providers like ArcGIS (Online), MapInfo, and AutoCAD?

Whilst you gain the benefit of not needing to reinvent the wheel yourself, you’ll be looking at a baseline license fee of around fifty to one hundred thousand dollars per year (give or take depending on team size), which seems reasonable. But that baseline can grow into hundreds of thousands and even millions of dollars if more people need access to the solution, more products are powered by this infrastructure or more features need to be unlocked. More of anything equals more money out of the client’s pocket.

And of course, there is the perpetual and airtight lock-in with your solution provider, which acts as a chokehold on your business operations. Using multiple solutions (from different providers) in parallel is often not in the cards or comes at a very steep price. It almost seems like commercial solution providers want your money all to themselves… (could that be?)

Then what is the price that you pay for having an open source spatial data infrastructure stack from projects like GeoServer, PostGIS and Protomaps?

You gain several liberties and avoid the license fee, but you pay the price with an inefficient (and potentially very frustrated) tech team that is constantly burdened with maintaining the legacy code of a system that was never designed 1) to scale, 2) to be used the way contemporary use cases require, and 3) to comply with the data integration and consumption preferences of today’s users and downstream systems.

This results in crashes, low performance, ad hoc patching, data wrangling tasks and (in many cases) external consultancy/development fees to keep the old system from crumbling under modern demands. Not to mention the opportunity cost stemming from this sub-par way of dealing with spatial data. Once again, costs can amount to millions of dollars yearly if we look at the status quo holistically.

The sad joke amidst all this is that these two - frankly unattractive - scenarios are considered ‘normal’. All thanks to a status quo that places a hefty price tag on interoperability, scalability and flexibility (either through license fees or human effort). It’s even more saddening because these three things are what anyone would look for in a foundational piece of data management infrastructure (geospatial or otherwise).

For commercial solution providers, most money is made from clients who have fully committed to their product suite, to the point where migrating away from the system is a hellish experience. It’s SaaS Sales 101. This practice entails:

- Enforcing seat-based licensing (which can easily get out of hand)

- Charging for additional (interoperability) features (which directly blocks you from complying with data management best practices and being future-proof)

- Charging for intensity of usage (which can also easily get out of hand)

- Enforcing a revenue share when the infrastructure powers additional products/services/markets (which is a very parasitic practice)

The above practices are still used by many companies, but thanks to an ever-changing digital landscape and an evolving (and vocal!) client base, things are changing FAST.

The Future of Spatial Data Management

More and more businesses, governments and NGOs are realizing that they can promote a fair data landscape, limit lock-in, and prepare for an unknown future only when their spatial infrastructure is flexible and interoperable. And with the growing availability of new data sources, analysis methods and endpoints, that sentiment is getting stronger by the day.

What does interoperability imply in this context? Well, in our vocabulary, a spatial data management infrastructure is interoperable when it guarantees seamless access to the spatial content it hosts from every workflow/endpoint/tool simultaneously.

What has led companies to this discovery?

In a first attempt to attain smooth spatial data management (from gathering data from multiple sources and extracting intelligence from it, to disseminating insights and data to downstream applications and systems), many companies are tempted to create an in-house infrastructure. While a proactive approach to solving problems is always admirable, a knee-jerk decision to ‘build’ rather than ‘buy’ is often flawed.

In order to create this foundational piece of data infrastructure, companies pour in a lot of time and resources. They make this investment before their technical teams can dive into the cool stuff (of extracting intelligence and creating mind-blowing data products that can change the world). But the problem with this ad hoc approach is that it doesn’t translate effectively onto the next use-case or project (perhaps due to a change in data source, downstream system or method of analysis). Home-made infrastructure (usually built with only one or two use-cases in mind) is not automatically flexible, scalable or interoperable and costs more than it earns in the medium to long run (when code starts to rot).

This “do-it-yourself” attitude (while being well-intentioned) slowly but surely chips away at a company's margins and focus.

Is there an easy fix? Yes! If you have the right tools and solutions deployed internally, then it is quite easy to have a spatial data infrastructure that can support all use cases and projects.

That is where the future of spatial data management is heading, and the future of all technology for that matter: to specialize, collaborate and synergize for better results for all!

What makes a great Spatial Data Management solution?

In the simplest terms, a great spatial data management solution is one that challenges the limitations created by the status quo. Keeping that premise in mind, the solution should not penalize:

- The intensity of data usage

- The availability of data via various endpoints simultaneously

- The number of users/systems/products depending upon the data

The sole focus of a great spatial data management solution should be on providing the best mechanism to manage spatial data flexibly, scalably and interoperably. By pricing such a solution based on data volumes under management, the incentives of the solution provider and its users are (finally!) aligned. When the provider does a good job providing the best data management solution, it will attract more data and more revenue. Additionally, because costs are purely based on data volumes under management instead of charging for each and every feature or interaction, users are not stifled in their own operations. This creates a fair, win-win situation which the entire geospatial industry desperately needs.
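To make the incentive argument concrete, here is a minimal sketch comparing a seat-based fee with a fee based purely on data volume under management. Every number and name in it (`PRICE_PER_SEAT`, `PRICE_PER_TB`, `DATA_TB`, and both functions) is a hypothetical placeholder chosen for illustration, not actual pricing from any provider.

```python
# Illustrative comparison of two pricing models.
# All prices and volumes below are hypothetical placeholders.

PRICE_PER_SEAT = 1_000   # assumed yearly fee per user seat
PRICE_PER_TB = 500       # assumed yearly fee per terabyte under management
DATA_TB = 20             # assumed data volume under management


def seat_based_cost(seats: int, price_per_seat: float = PRICE_PER_SEAT) -> float:
    """Yearly cost when every additional user adds a license fee."""
    return seats * price_per_seat


def volume_based_cost(terabytes: float, price_per_tb: float = PRICE_PER_TB) -> float:
    """Yearly cost when only the managed data volume is charged."""
    return terabytes * price_per_tb


if __name__ == "__main__":
    # The data volume stays fixed while the number of users grows.
    for seats in (10, 50, 200):
        print(
            f"{seats:>3} users | seat-based: {seat_based_cost(seats):>9,.0f}"
            f" | volume-based: {volume_based_cost(DATA_TB):>9,.0f}"
        )
```

Under these assumed figures, the seat-based fee scales with every new user, while the volume-based fee only moves when the amount of data under management changes.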

Maximizing interoperability should always be the cornerstone of spatial data management solutions that want to be the system of record. Anything else is simply undermining the way in which good business should be conducted.

Conclusion

Interoperability needs to come at a reasonable price, and the only way to do that is with a solution that dares to change the dominant business model. The world needs spatial data to be free from restrictive silos. With more and more industries and professionals using spatial data in their operations, it is time to make true interoperability affordable.

That is what spatial-data-reliant industries are calling for. That is why we are here.
